import re

import pandas as pd
import requests
from bs4 import BeautifulSoup

def crawl_orgchart_main(url):

# 요청 및 응답 확인

headers = {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36"

}

response = requests.get(url, headers=headers)

soup = BeautifulSoup(response.text, "html.parser")

# 데이터 저장 리스트

data = []

# "org_list" 내부 정보 추출

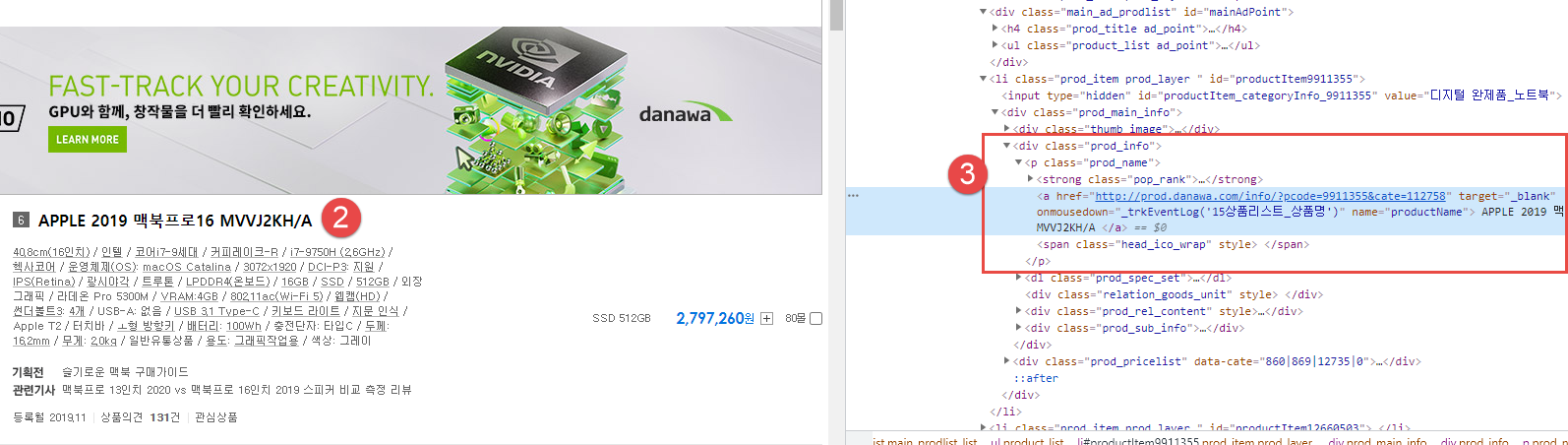

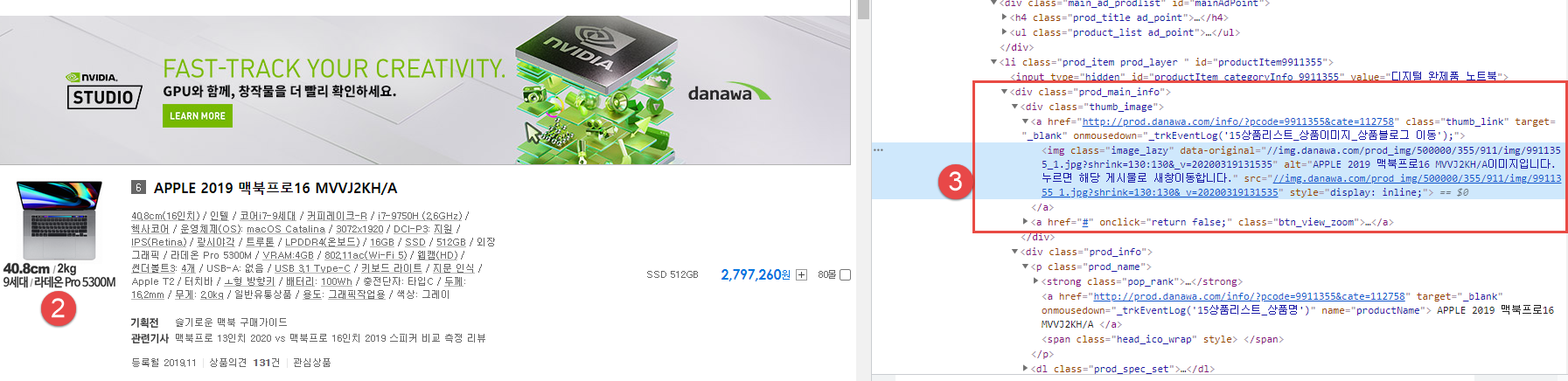

org_list = soup.select(".org_list ul li a")

for org in org_list:

name = org.find("em").get_text(strip=True) if org.find("em") else ""

name = re.split("\\d", name)[0].strip() # 숫자 제거하여 이름만 추출

duty = org.find("span").get_text(strip=True) if org.find("span") else ""

phone = org.find("p").get_text(strip=True) if org.find("p") else ""

position = ""

if name and duty:

data.append([duty, position,phone, name])

# "modal-table" 내부 정보 추출 (부구청장, 비서실 등)

modal_tables = soup.select(".modal-table tbody tr")

for row in modal_tables:

cols = row.find_all("td")

if len(cols) >= 3:

name = cols[0].get_text(strip=True) # 이름 => 부서명

duty = cols[1].get_text(strip=True) # 담당업무

phone = cols[2].get_text(strip=True) # 연락처

position = ""

data.append([duty, position,phone, name])

# 데이터프레임 생성

columns = ["부서명", "직위", "전화번호","담당업무"]

df = pd.DataFrame(data, columns=columns)

return df
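

# A minimal sketch, not part of the original script: the department pages
# listed in the main block of this file appear to share one URL pattern that
# differs only in `deptId`. `DEPT_GUIDANCE_URL` and `build_dept_url` are
# hypothetical names, and the default menu_id="1892" is simply copied from
# the URLs used in this file.
from urllib.parse import urlencode

DEPT_GUIDANCE_URL = "https://www.jongno.go.kr/portal/deptGuidance.do"


def build_dept_url(dept_id, menu_id="1892"):
    """Return the department guidance URL for one department ID."""
    query = urlencode({"menuId": menu_id, "deptId": dept_id})
    return f"{DEPT_GUIDANCE_URL}?{query}"


# Possible usage: urls = [build_dept_url(d) for d in ("30002110000", "30001900000")]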

def crawl_orgchart(url):

# 요청 및 응답 확인

headers = {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36"

}

response = requests.get(url, headers=headers)

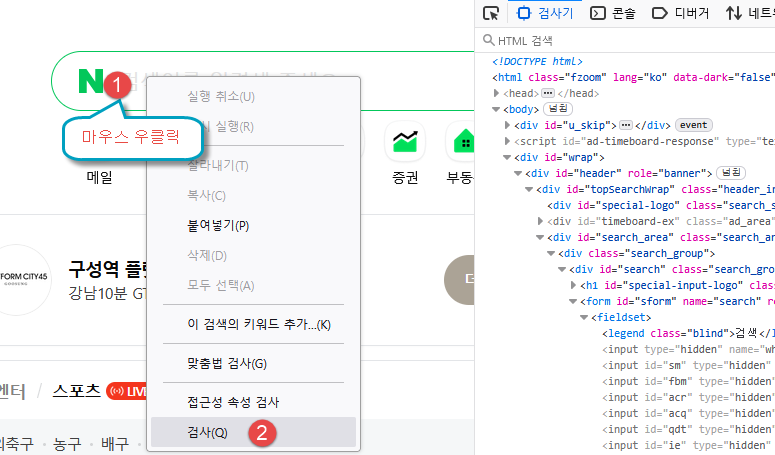

soup = BeautifulSoup(response.text, "html.parser")

# 데이터 저장 리스트

data = []

# "organization" 내부 정보 추출

organization= soup.select_one(".organization ul li")

if organization:

department = organization.find("dt").get_text(strip=True) if organization.find("dt") else ""

name_position_parts = organization.find("dd").get_text(strip=True, separator=" ").split(" ") if organization.find("dd") else []

name_position = name_position_parts[1] if len(name_position_parts) > 1 else name_position_parts[0] if name_position_parts else ""

# name_position = organization.find("dd").get_text(strip=True, separator=" ").split(" ")[1] if organization.find("dd") else ""

phone_tag = organization.select_one(".telnum a")

phone = phone_tag.get_text(strip=True) if phone_tag else ""

duty_position_parts = organization.find("dd").get_text(strip=True, separator=" ").split(" ") if organization.find("dd") else []

duty = " ".join(duty_position_parts[:2]) if len(duty_position_parts) > 1 else duty_position_parts[0] if duty_position_parts else ""

data.append([department, name_position, phone, duty])

departments = soup.select(".text_area.depart-team .mb5 .table_type1 tbody tr th")

tables = soup.select(".table_type1.mt20")

# 테이블 데이터 추출

for dept, table in zip(departments, tables):

department_name = dept.text.strip()

table_rows = table.select("tbody tr")

for row in table_rows:

columns = row.find_all("td")

if len(columns) >= 3:

position = columns[0].get_text(strip=True) # 직위

duty = columns[1].get_text(strip=True) # 담당업무

phone = columns[2].get_text(strip=True) # 행정전화번호

data.append([department_name, position, phone, duty])

columns = ["부서명", "직위", "전화번호","담당업무"]

df = pd.DataFrame(data, columns=columns)

return df
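

# A minimal sketch, not part of the original script: when many pages are
# crawled in a row, it may help to pause briefly between requests and to skip
# pages that fail instead of aborting the whole run. `crawl_all`, `delay`, and
# the returned `failed` list are hypothetical; `crawl_fn` would be a function
# such as crawl_orgchart.
import time


def crawl_all(urls, crawl_fn, delay=0.5):
    """Call crawl_fn on each URL and return (results, failed_urls)."""
    results, failed = [], []
    for i, url in enumerate(urls):
        if i > 0:
            time.sleep(delay)  # pause between consecutive requests
        try:
            results.append(crawl_fn(url))
        except Exception as exc:  # deliberately broad in this sketch
            print(f"skipped {url}: {exc}")
            failed.append(url)
    return results, failed


# Possible usage: dataframes, failed = crawl_all(urls, crawl_orgchart)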

if __name__ == "__main__":
    url_main = "https://www.jongno.go.kr/Main.do?menuId=1917&menuNo=1917"
    df_main = crawl_orgchart_main(url_main)
    if not df_main.empty:
        print(df_main)
        df_main.to_csv("종로구청_main.csv", index=False, encoding="utf-8-sig")

    urls = [
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002110000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30001900000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002340000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002350000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002360000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002430000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002440000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002450000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002460000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002470000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002480000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002490000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002500000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002510000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002520000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002530000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002540000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002710000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002720000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002730000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002740000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002750000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002760000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002770000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002780000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002790000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002550000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002560000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002570000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002580000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002590000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002600000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002800000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002810000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002820000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002830000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002310000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30001530000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30000370000",
        "https://www.jongno.go.kr/portal/deptGuidance.do?menuId=1892&deptId=30002320000",
    ]

    # Crawl the data from every URL
    dataframes = [crawl_orgchart(url) for url in urls]

    # Concatenate the per-department DataFrames
    df_combined = pd.concat(dataframes, ignore_index=True)

    # Print and save the combined result if it is not empty
    if not df_combined.empty:
        print(df_combined)
        df_combined.to_csv("종로구청.csv", index=False, encoding="utf-8-sig")